🍿 3 min. read

GitHub recently released a feature that allows users to create a profile-level README to display prominently on their GitHub profile. This article walks through how to access this new feature. I'll also be sharing some fun GitHub profiles I've seen so far. I'd love it if you shared yours with me on Twitter @indigitalcolor.

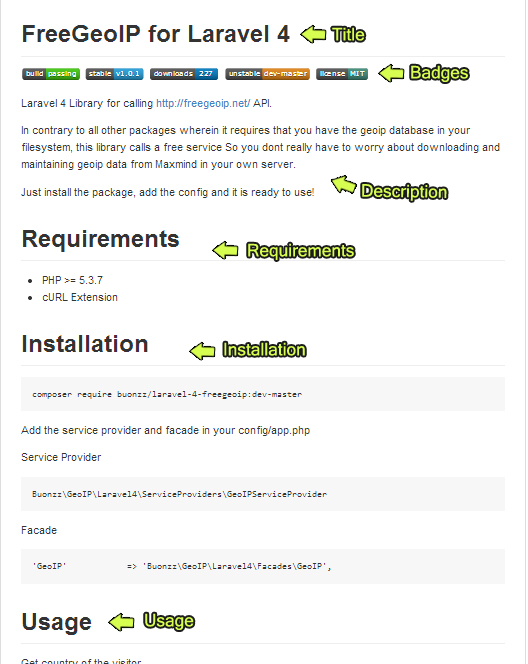

The above GIF shows what my README looks like at the time of this writing. You may notice I was recently selected to be a GitHub Star!

Why READMEs?

The GitHub profile-level README feature allows more content than the profile bio and supports Markdown, which means you can play around with the content more visually (did someone say GIFs!?). The README is also significantly more visible, as it is placed above pinned repositories and takes up as much space above the fold of the webpage as you like.

A solid README is a core component of well-documented software and often encourages collaboration by sharing helpful context with contributors. In my opinion, a profile-level README seems like a great extension of a convention a lot of GitHub users are already familiar with. If you're looking to make project-level READMEs more awesome and helpful, check out matiassingers/awesome-readme for resources and examples of compelling READMEs.

How do I create a profile README?

The profile README is created by creating a new repository with the same name as your username. For example, my GitHub username is m0nica so I created a new repository with the name m0nica. Note: at the time of this writing, in order to access the profile README feature, the letter-casing must match your GitHub username.

Already have a repo named username/username?

If you are interested in setting up a profile-level README, you can rename the repository or repurpose its existing README, based on what makes the most sense in your particular situation.

Create a new repository with the same name (including casing) as your GitHub username: https://github.com/new

Create a README.md file inside the new repo with content (text, GIFs, images, emojis, etc.)

Commit your fancy new README!

- If you're on GitHub's web interface you can choose to commit directly to the repo's main branch (i.e., master or main), which will make it immediately visible on your profile

Push changes to GitHub (if you made changes locally, i.e., on your computer and not on github.com)

Fun READMEs

GitHub profile READMEs are written in Markdown, which means you aren't limited to text and links: you can include GIFs and images too. Need to brush up on Markdown syntax? Check out this Markdown Cheatsheet.

hey, check out the new @github profile README! this is a really nice addition — I love that we can add some context (and/or nonsense) to our GitHub profiles now 😍

see mine: https://t.co/Cvrch1DVFD

thanks to @cassidoo for the heads up that this went live! pic.twitter.com/xMTeBgRLh0

It's not as creative as @sudo_overflow's readme, but here's what I came up with. I also plan on adding some text below the image with links to my resume, etc. pic.twitter.com/C6b8tNDo1z

— donavon 'wyld' west (@donavon) July 9, 2020

Is this how we suppose use github's readme? pic.twitter.com/XvLvCUC6iD

— Pouya (@Saadeghi) July 9, 2020

If you're really ambitious you can use GitHub actions or other automation like bdougieYO or simonw to dynamically pull data into your README:

Check it out. I made MySpace but on @github. https://t.co/p4DWP4DxRR - My list is power by a GitHub Action workflow 😏 pic.twitter.com/PN80mFCqOE

— bdougie on the internet (@bdougieYO) July 10, 2020

Made myself a self-updating GitHub personal README! It uses a GitHub Action to update itself with my latest GitHub releases, blog entries and TILs https://t.co/Eve7FOrwYK pic.twitter.com/oJPXLtFdgM

— Simon Willison (@simonw) July 10, 2020

Serverless functions can also be used to dynamically generate information (for example your current Spotify activity):

I embedded a @Spotify Now Playing widget in my @github profile README!

It's an SVG rendered on the fly via @vercel serverless function, included in the README via <img> tag.

Supremely over-engineered, but I discovered lots of fun hacks in the process. https://t.co/Z8TBE9WxRy pic.twitter.com/wdKw0maPKp
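Self-updating READMEs like the ones above usually boil down to a scheduled job that rewrites one part of README.md and commits the result. Here is a minimal sketch of the rewriting step only. The marker comments, the function name and the sample post titles are all made up for illustration; this is not how any of the profiles above are actually implemented.

```python
import re

# Hypothetical marker comments; any unique pair of HTML comments would work.
START = "<!-- recent-posts:start -->"
END = "<!-- recent-posts:end -->"


def replace_section(readme: str, new_body: str) -> str:
    """Return readme with the text between START and END replaced by new_body."""
    pattern = re.compile(
        re.escape(START) + r".*?" + re.escape(END),
        flags=re.DOTALL,
    )
    replacement = f"{START}\n{new_body}\n{END}"
    # A function replacement avoids backslash-escape surprises in new_body.
    updated, count = pattern.subn(lambda _match: replacement, readme, count=1)
    if count == 0:
        raise ValueError("marker comments not found in README")
    return updated


readme = f"# Hi there\n\n{START}\nold list\n{END}\n\nThanks for visiting!\n"
posts = ["Post A", "Post B"]  # made-up data standing in for a feed or API response
updated = replace_section(readme, "\n".join(f"- {title}" for title in posts))
print(updated)
```

Because HTML comments don't render in Markdown, the markers stay invisible on the profile while giving the script a stable place to write.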

I'm a huge proponent that folks should maintain a website they have complete ownership over (even if it's a no-code website solution) but this is tempting..

I just created my @github profile README as well with a bunch of badges. This is really a brilliant idea. We may no longer need to maintain our personal website. We can write blogs as issues, manage Wiki and task board, free traffic analytics and CI/CD. https://t.co/zSXZKT6a20 pic.twitter.com/mK9OWXG9iH

— Yuan Tang (@TerryTangYuan) July 10, 2020

hey, so we heard ya & are trying out a thing where you CAN have a readme on your @github profile.. @mikekavouras built it btw! re: https://t.co/UC6q3qHjjR pic.twitter.com/kB0kafgovY

— kathy ☁️ (@pifafu) May 27, 2020

I've been inspired by the creative READMEs I've seen so far and am looking forward to seeing all kinds of profiles in the upcoming months.

This article was published on July 11, 2020.

What makes a good GitHub README? It’s a question almost every developer has asked as they’ve typed:

As far as we can tell, nobody has a clear answer for this, yet you typically know a good GitHub README when you see it. The best explanation we’ve found is the README Wikipedia entry, which offers a handful of suggestions.

We set out to flex our data science muscles, and see if we could come up with an objective standard for what makes a good GitHub README using machine learning. The result is the GitHub README Analyzer demo, an experimental tool to algorithmically improve the quality of your GitHub READMEs.

The complete code for this project can be found in our iPython Notebook here.

Thinking About the GitHub README Problem

Understanding the contents of a README is a problem for NLP, and it’s a particularly hard one to solve.

To start, we made some assumptions about what makes a GitHub README good:

- Popular repositories probably have good READMEs

- Popular repositories should have more stars than unpopular ones

- Each programming language has a unique pattern, or style

With those assumptions in place, we set out to collect our data, and build our model.

Approaching the Solution

Step One: Collecting Data

First, we needed to create a dataset. We decided to use the 10 most popular programming languages on GitHub by the number of repositories, which were JavaScript, Java, Ruby, Python, PHP, HTML, CSS, C++, C, and C#. We used the GitHub API to retrieve a count of repos by language, and then grabbed the READMEs for the 1,000 most-starred repos per language. We only used READMEs that were encoded in either Markdown or reStructuredText formats.

We scraped the first 1,000 repositories for each language. Then we removed any repository that didn’t have a GitHub README, had one encoded in an obscure format, or was in some way unusable. After removing the bad repos, we ended up with, on average, 878 READMEs per language.
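To make the collection step concrete, here is a rough sketch of how the page requests for the most-starred repositories in one language could be built. It assumes GitHub's REST repository search endpoint, which is our guess at a reasonable way to do this rather than a record of the exact calls we made. The function name is made up, and the sketch only builds URLs without sending any requests.

```python
from urllib.parse import urlencode

SEARCH_URL = "https://api.github.com/search/repositories"
PER_PAGE = 100  # the search API returns at most 100 items per page


def top_repo_urls(language: str, total: int = 1000) -> list[str]:
    """Build the page URLs for the `total` most-starred repos of a language."""
    pages = -(-total // PER_PAGE)  # ceiling division
    urls = []
    for page in range(1, pages + 1):
        query = urlencode({
            "q": f"language:{language}",
            "sort": "stars",
            "order": "desc",
            "per_page": PER_PAGE,
            "page": page,
        })
        urls.append(f"{SEARCH_URL}?{query}")
    return urls


urls = top_repo_urls("python")
print(len(urls))
print(urls[0])
```

The search API only exposes the first 1,000 results of any query, which lines up with the 1,000 repositories per language used here.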

Step Two: Data Scrubbing

We then needed to convert all the READMEs into a common format for further processing. We chose to convert everything to HTML. When we did this, we also removed common words using a stop word list, and then stemmed the remaining words to find the common base, or root, of each word. We used NLTK's Snowball stemmer for this. We also kept the original, unstemmed words to be used later for making recommendations from our model for the demo (e.g. instead of recommending “instal” we could recommend “installation”).

This preprocessing ensured that all the data was in a common format and ready to be vectorized.
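A minimal sketch of the stop word and stemming step, assuming plain text input rather than HTML. The tiny stop word set and the function name are illustrative only; `SnowballStemmer` is the NLTK class for the Snowball stemmer.

```python
import re

from nltk.stem.snowball import SnowballStemmer

# Tiny illustrative stop word list; a real run would use a much fuller one.
STOP_WORDS = {"a", "an", "and", "the", "to", "of", "for", "in", "is", "this"}

stemmer = SnowballStemmer("english")


def preprocess(text: str) -> list[tuple[str, str]]:
    """Return (stem, original word) pairs with stop words removed."""
    words = re.findall(r"[a-z]+", text.lower())
    return [(stemmer.stem(word), word) for word in words if word not in STOP_WORDS]


pairs = preprocess("The installation guide for this project")
# Map each stem back to an original word so recommendations stay readable.
stem_to_word = {stem: word for stem, word in pairs}
print(pairs)
```

Keeping the `stem_to_word` mapping around is what lets a recommendation show a real word instead of a truncated stem.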

Step Three: Feature Extraction

Now that we had a clean dataset to work with, we needed to extract features. We used scikit-learn, a Python machine learning library, because it’s easy to learn, simple, and open source.

We extracted five features from the preprocessed dataset:

- 1-grams from the headers (<h1>, <h2>, <h3>)

- 1-grams from the text of the paragraphs (<p>)

- Count of code snippets (<pre>)

- Count of images (<img>)

- Count of characters, excluding HTML tags
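The five features above can be pulled out of README HTML with a small parser. This is a sketch using only Python's standard library; the class and attribute names are made up for illustration, and our notebook may well do this differently.

```python
from html.parser import HTMLParser


class ReadmeFeatures(HTMLParser):
    """Collect header words, paragraph words and three counts from README HTML."""

    HEADER_TAGS = {"h1", "h2", "h3"}

    def __init__(self) -> None:
        super().__init__()
        self.header_words: list[str] = []
        self.paragraph_words: list[str] = []
        self.code_snippets = 0
        self.images = 0
        self.characters = 0
        self._open_tags: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            self.images += 1  # <img> has no closing tag, so it is never pushed
            return
        if tag == "pre":
            self.code_snippets += 1
        self._open_tags.append(tag)

    def handle_endtag(self, tag):
        # Pop back to the most recent matching open tag, if there is one.
        if tag in self._open_tags:
            while self._open_tags.pop() != tag:
                pass

    def handle_data(self, data):
        self.characters += len(data)  # text only, tags are excluded
        if any(tag in self.HEADER_TAGS for tag in self._open_tags):
            self.header_words.extend(data.split())
        elif "p" in self._open_tags:
            self.paragraph_words.extend(data.split())


html = (
    "<h1>Installation</h1><p>Run the installer.</p>"
    "<pre>pip install demo</pre><img src='logo.png'>"
)
parser = ReadmeFeatures()
parser.feed(html)
parser.close()
print(parser.header_words, parser.paragraph_words)
print(parser.code_snippets, parser.images, parser.characters)
```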

To build feature vectors for our README headers and paragraphs, we tried TF-IDF, Count, and Hashing vectorizers from scikit-learn. We ended up using TF-IDF, because it had the highest accuracy rate. We used 1-grams, because anything more than that became computationally too expensive, and the results weren’t noticeably improved. If you think about it, this makes sense. Section headers tend to be single words, like Installation, or Usage.

Since code snippets, images, and total characters are numerical values by nature, we simply cast each of them into a 1×1 matrix.
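As a rough illustration of the two kinds of feature vectors, here is a sketch using scikit-learn's `TfidfVectorizer` restricted to 1-grams, plus a numerical feature reshaped into one column. The header strings and image counts are made-up sample data.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer

# Made-up header text for three READMEs, already lowercased and joined.
headers = [
    "installation usage license",
    "usage contributing",
    "installation examples",
]

# ngram_range=(1, 1) keeps single words only, i.e. 1-grams.
vectorizer = TfidfVectorizer(ngram_range=(1, 1))
header_matrix = vectorizer.fit_transform(headers)  # sparse, one row per README

# A numerical feature is a single value per README, so stacking the
# per-README 1x1 matrices gives an n x 1 column.
image_counts = [3, 0, 1]
image_matrix = np.array(image_counts, dtype=float).reshape(-1, 1)

print(header_matrix.shape)
print(sorted(vectorizer.vocabulary_))
print(image_matrix.shape)
```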

Step Four: Benchmarking

We benchmarked 11 different classifiers against each other for all five features. We then repeated this for all 10 programming languages. In reality, there was an 11th “language,” which was the aggregate of all 10 languages. We later used this 11th (i.e. “All”) language to train a general model for our demo. We did this to handle situations where a user was trying to analyze a README representing a project in a language that wasn’t one of the top 10 languages on GitHub.

We used the following classifiers: Logistic Regression, Passive Aggressive, Gaussian Naive Bayes, Multinomial Naive Bayes, Bernoulli Naive Bayes, Nearest Centroid, Label Propagation, SVC, Linear SVC, Decision Tree, and Extra Tree.

We would have used nuSVC, but as Stack Overflow can explain, it has no direct interpretation, which prevented us from using it.

In all, we created 605 different models (11 classifiers x 5 features x 11 languages; remember, we created an “All” language to produce an overall model, hence 11). We picked the best model per feature per programming language, which means we ended up using 55 unique models (5 features x 11 languages).
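The model counts are just the sizes of two Cartesian products, which a few lines of Python can confirm. The short feature labels below are shorthand for the five features listed earlier.

```python
from itertools import product

classifiers = [
    "Logistic Regression", "Passive Aggressive", "Gaussian Naive Bayes",
    "Multinomial Naive Bayes", "Bernoulli Naive Bayes", "Nearest Centroid",
    "Label Propagation", "SVC", "Linear SVC", "Decision Tree", "Extra Tree",
]
features = ["headers", "paragraphs", "code snippets", "images", "characters"]
languages = [
    "JavaScript", "Java", "Ruby", "Python", "PHP",
    "HTML", "CSS", "C++", "C", "C#", "All",
]

trained = list(product(classifiers, features, languages))  # every model trained
selected = list(product(features, languages))  # one winner per feature/language
print(len(trained), len(selected))  # 605 55
```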

View the interactive chart here. Find the detailed error rates broken out by language here.

Some notes on how we trained:

- Before we started, we divided our dataset into a training set and a testing set: 75% and 25%, respectively.

- After training the initial 605 models, we benchmarked their accuracy scores against each other.

- We selected the best models for each feature, and then we saved (i.e. pickled) the model. Persisting the model file allowed us to skip the training step in the future.

To determine the error rate of the models, we calculated their mean squared errors. For each feature and language, we selected the model with the lowest mean squared error, for 55 models in total.
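The training notes above can be sketched as a small benchmark loop. This is an illustration under loud assumptions: the data is synthetic (generated with `make_classification`), the target is a made-up binary label, and only three of the 11 classifiers are shown. It mirrors the 75/25 split, the mean squared error comparison and the pickling step, not our exact pipeline.

```python
import pickle

from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import BernoulliNB
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data; the real inputs were README feature vectors.
X, y = make_classification(n_samples=400, n_features=20, random_state=0)

# 75% training, 25% testing.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

candidates = {
    "Logistic Regression": LogisticRegression(max_iter=1000),
    "Bernoulli Naive Bayes": BernoulliNB(),
    "Decision Tree": DecisionTreeClassifier(random_state=0),
}

errors = {}
for name, model in candidates.items():
    model.fit(X_train, y_train)
    errors[name] = mean_squared_error(y_test, model.predict(X_test))

# Keep the candidate with the lowest mean squared error.
best_name = min(errors, key=errors.get)

# Pickle the winner so training can be skipped next time.
blob = pickle.dumps(candidates[best_name])  # write these bytes to a file to persist
restored = pickle.loads(blob)
print(best_name, errors[best_name])
```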

Step Five: Putting it All Together

With all of this in place, we can now take any GitHub repository, and score the README using our models.

For non-numerical features, like Headers and Paragraphs, the model tries to make recommendations that should improve the quality of your README by adding, removing, or changing every word, and recalculating the score each time.

For the numerical-based features, like the count of <pre>, <img> tags, and the README length, the model incrementally increases and decreases values for each feature. It then re-evaluates the score with each iteration to determine an optimized recommendation. If it can’t produce a higher score with any of the changes, no recommendation is given.
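For the numerical features, the search described above can be sketched as trying nearby values and keeping the best-scoring one. The function names, the search window and the toy scoring function are all hypothetical; in the demo the score would come from a trained model.

```python
from typing import Callable, Optional


def recommend_count(
    current: int,
    score: Callable[[int], float],
    max_delta: int = 5,
) -> Optional[int]:
    """Try nearby values and return the best-scoring one, or None if no gain."""
    best_value, best_score = current, score(current)
    for delta in range(1, max_delta + 1):
        for candidate in (current - delta, current + delta):
            if candidate < 0:
                continue  # a count cannot be negative
            candidate_score = score(candidate)
            if candidate_score > best_score:
                best_value, best_score = candidate, candidate_score
    return None if best_value == current else best_value


def toy_score(images: int) -> float:
    """Hypothetical scoring function that peaks at three images."""
    return -abs(images - 3)


print(recommend_count(1, toy_score))  # 3
print(recommend_count(3, toy_score))  # None, nothing scores higher
```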

Try out the GitHub README Analyzer now.

Conclusion

Some of our assumptions proved to be true, while some were off. We found that headers and paragraph text did correlate with popular repositories. However, this wasn’t true for the length of a README, or the count of code samples and images.

The reason for this may stem from the fact that n-gram based features, like Headers and Paragraphs, train on hundreds or thousands of variables per document, whereas numerical features train on only one variable per document. Hence, ~1,000 repositories per programming language were enough to train models for n-gram based features, but not enough to train decent numerical-based models. Another reason might be that the numerical-based features had noisy data, or that they simply didn’t correlate with the number of stars a project had.

When we tested our models, we initially found that they kept recommending that we significantly shorten every README (e.g. one README was to be shortened from 408 characters down to 6!) and remove almost all the images and code samples. This was counterintuitive, so we plotted the data and learned that a big part of our dataset had zero images, zero code snippets, or fewer than 1,500 characters.

This explains why our model behaved so poorly at first for the length, code sample, and image features. To account for this, we took an additional step in our preprocessing and removed any README that was less than 1,500 characters, contained zero images, or zero code samples. This dramatically improved the results for images and code sample recommendations, but the character length recommendation did not improve significantly. In the end, we chose to remove the length recommendation from our demo.
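The extra filtering step amounts to a simple predicate over each README's numerical features. Here is a sketch with made-up records; the field names are illustrative only.

```python
MIN_CHARACTERS = 1500

# Hypothetical records; the field names are illustrative only.
readmes = [
    {"repo": "a/long", "characters": 4200, "images": 2, "code_snippets": 5},
    {"repo": "b/short", "characters": 408, "images": 1, "code_snippets": 1},
    {"repo": "c/no-images", "characters": 3000, "images": 0, "code_snippets": 4},
    {"repo": "d/no-code", "characters": 2500, "images": 3, "code_snippets": 0},
]


def is_usable(readme: dict) -> bool:
    """Keep a README only if it is long enough and has images and code."""
    return (
        readme["characters"] >= MIN_CHARACTERS
        and readme["images"] > 0
        and readme["code_snippets"] > 0
    )


kept = [readme["repo"] for readme in readmes if is_usable(readme)]
print(kept)  # ['a/long']
```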

Think you can come up with a better model? Check out our iPython Notebook covering all of the steps from above, and tweet us @Algorithmia with your results.

Below is the numerical feature distribution for images, code snippets, and character length across all READMEs. Find the detailed histograms broken out by language here.
